LLMs in Practice

What you'll learn

The CBX experience delivers outcomes that matter at scale.

In-Depth Hallucination Analysis

Bias in LLMs

Performance Evaluation Techniques

Career Insights in LLM Engineering

Improving LLM Accuracy

Advanced Techniques for Controlling LLM Outputs

Skills you'll gain

Understanding Hallucinations in LLMs

Understand what hallucinations are in large language models, why they occur, and how factors such as training data, model architecture, and prompting methods contribute to incorrect or fabricated outputs.

Mitigating Bias in LLMs

Learn what bias in large language models is, how it originates, and how it impacts outputs. Understand practical ways to identify and reduce bias using responsible data, evaluation, and prompting techniques.

Evaluating LLM Performance

Learn the key metrics for assessing LLMs, including intrinsic measures such as perplexity and cross-entropy, and extrinsic benchmarks such as GLUE, SuperGLUE, BIG-bench, HELM, and FLASK.
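
As a rough illustration of how the two intrinsic metrics relate: perplexity is the exponential of the average cross-entropy over the observed tokens. The sketch below assumes you already have the probability the model assigned to each correct token; the names `cross_entropy`, `perplexity`, and `token_probs` are hypothetical and not tied to any particular library.

```python
import math


def cross_entropy(token_probs):
    """Average negative log-likelihood (in nats) of the observed tokens."""
    return -sum(math.log(p) for p in token_probs) / len(token_probs)


def perplexity(token_probs):
    """Perplexity is exp(cross-entropy) when cross-entropy is in nats."""
    return math.exp(cross_entropy(token_probs))


# Assumed example: the model gave probability 0.25 to each correct token,
# as if it were choosing uniformly among four options at every step.
token_probs = [0.25, 0.25, 0.25, 0.25]
print(f"cross-entropy: {cross_entropy(token_probs):.3f} nats")
print(f"perplexity:    {perplexity(token_probs):.3f}")
```

In this toy case the perplexity comes out at roughly 4, which matches the intuition that the model is as uncertain as a uniform choice among four tokens. Lower values mean the model assigned higher probability to the text it was evaluated on.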

Fine-Tuning Large Language Models

Learn how large language models are fine-tuned for specific tasks using domain data and instruction-based training. Understand when fine-tuning is needed, its benefits, limitations, and how it improves model performance for real-world applications.

Controlling LLM Outputs

Learn how to control large language model outputs using techniques such as structured prompting, role definition, constraints, and evaluation methods to ensure responses are accurate, consistent, and aligned with intended goals across different applications.
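
One way to picture these techniques together is a prompt template that states a role and explicit constraints, paired with a check on what comes back. The following is a minimal sketch under stated assumptions, not the course's own method: `build_prompt`, `validate_response`, and the JSON shape with `answer` and `confidence` fields are all hypothetical, and no model is actually called.

```python
import json


def build_prompt(role, task, constraints):
    """Assemble a structured prompt: role definition, task, then constraints."""
    lines = [f"You are {role}.", f"Task: {task}", "Constraints:"]
    lines += [f"- {c}" for c in constraints]
    lines.append(
        'Respond only with JSON of the form '
        '{"answer": "<text>", "confidence": <number between 0 and 1>}.'
    )
    return "\n".join(lines)


def validate_response(raw):
    """Return the parsed response if it matches the expected shape, else None."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    answer = data.get("answer")
    confidence = data.get("confidence")
    if not isinstance(answer, str):
        return None
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return None
    if not 0 <= confidence <= 1:
        return None
    return data


prompt = build_prompt(
    role="a careful geography tutor",
    task="Name the capital of France.",
    constraints=["Answer in one word.", "Do not speculate."],
)
print(prompt)

# A hand-written stand-in for a model reply, used only to exercise the check.
print(validate_response('{"answer": "Paris", "confidence": 0.9}'))
```

Rejecting replies that fail validation, and retrying or falling back, is a simple way to keep downstream code from consuming malformed output.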

Meet your Mentors

Aditya Chhabra

Aditya brings 8+ years of AI engineering experience from Microsoft and leading startups, specializing in ChatGPT, generative AI, and LLM deployment. He turns complex concepts into practical skills and has mentored hundreds of Delhi NCR engineers in building production-ready AI systems. As lead instructor at CBX, Aditya focuses on hands-on projects and real-world expertise that accelerate career growth.

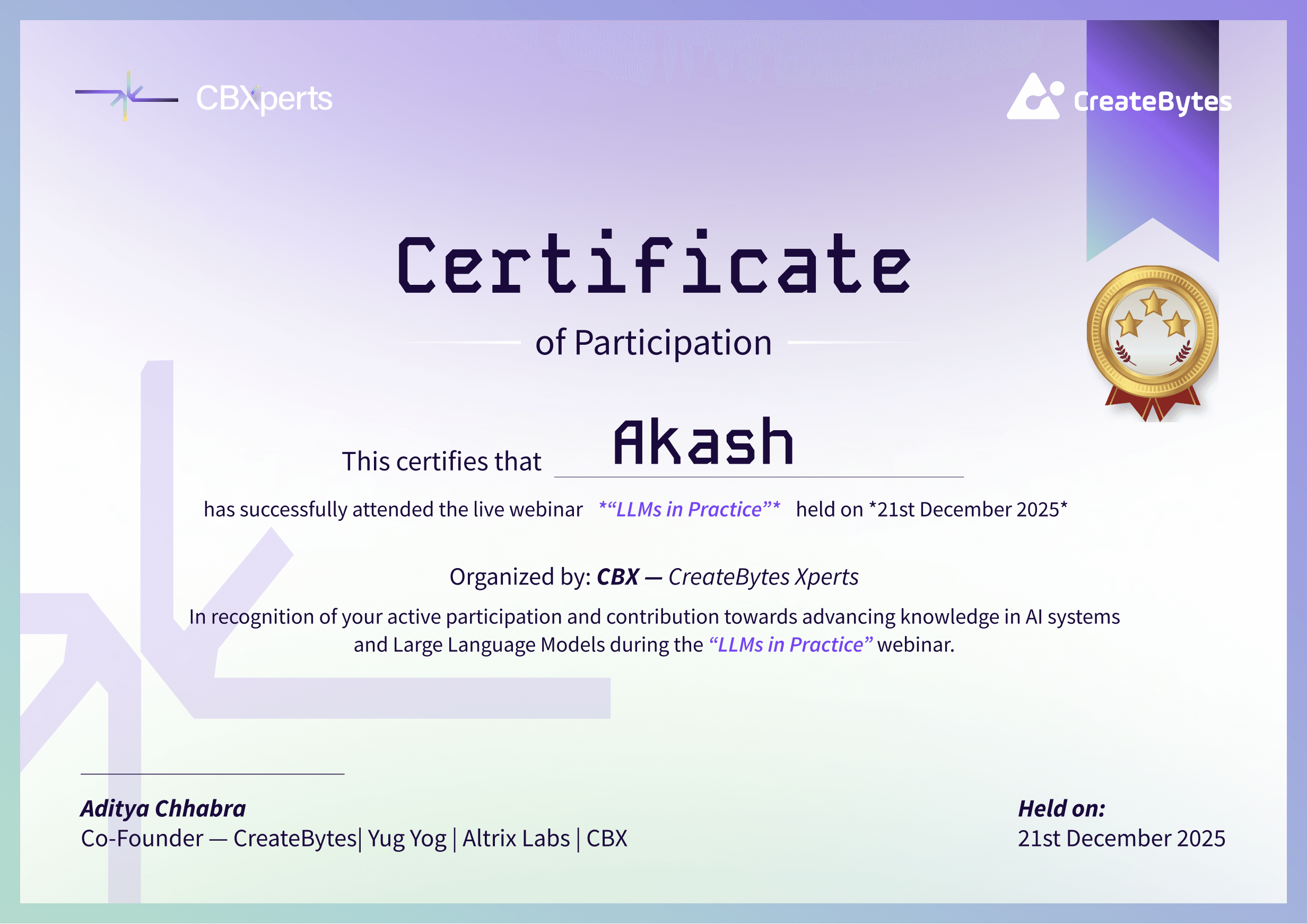

Earn a Verified Certificate of Completion
